By Matthew Brenningmeyer and Grace Reback - Lab Undergraduate Affiliates

Over the past few years, the speed at which disinformation is created, distributed, and consumed has become a growing concern for governments, businesses, and citizens. The spread of false information isn’t a new phenomenon; the terms disinformation and fake news can be traced back at least to the 1950s. The rise of social media and online information sharing, however, has allowed people to produce false information and spread it quickly through decentralized, distributed networks, vastly increasing its impact. Much of this information is posted with malicious intent and can cause real harm to society. The result of this flood of disinformation is a new era of digital and political communication.

This flood of disinformation creates several new challenges for those trying to prevent it. One major concern is the categorization and nomenclature of disinformation and related topics. Literature on disinformation often uses a wide variety of terms interchangeably to refer to the same concepts: fake news, misinformation, disinformation, lies, and hoaxes can all be used to describe the general practice of intentionally spreading false information. This inconsistency makes it much harder to understand and track trends across published works, because the grey areas in the terminology become increasingly significant.

Of these terms, the two most common are misinformation and disinformation. Misinformation is false or mistaken information that is not spread deliberately; it is often an honest mistake. Disinformation, on the other hand, is the deliberate spread of false information with the intent to dissuade and/or deceive. Fake news is another frequently used, and frequently misused, term. President Trump is known for using the phrase as a rhetorical tool rather than as a legitimate label. One often-used definition of fake news is “news articles that are intentionally and verifiably false and could mislead readers.” By this definition, fake news is a category of disinformation.

Another major challenge for investigators and social media platforms is removing disinformation in a timely way. Many methods, such as Twitter’s flagging of disinformation, only address false information after it has spread and caused most of its harm. Identifying and stopping disinformation before it spreads is one of the major focuses of research in this area. Understanding and clearly communicating the trends and categories of disinformation is an important first step toward catching it before it can cause harm.

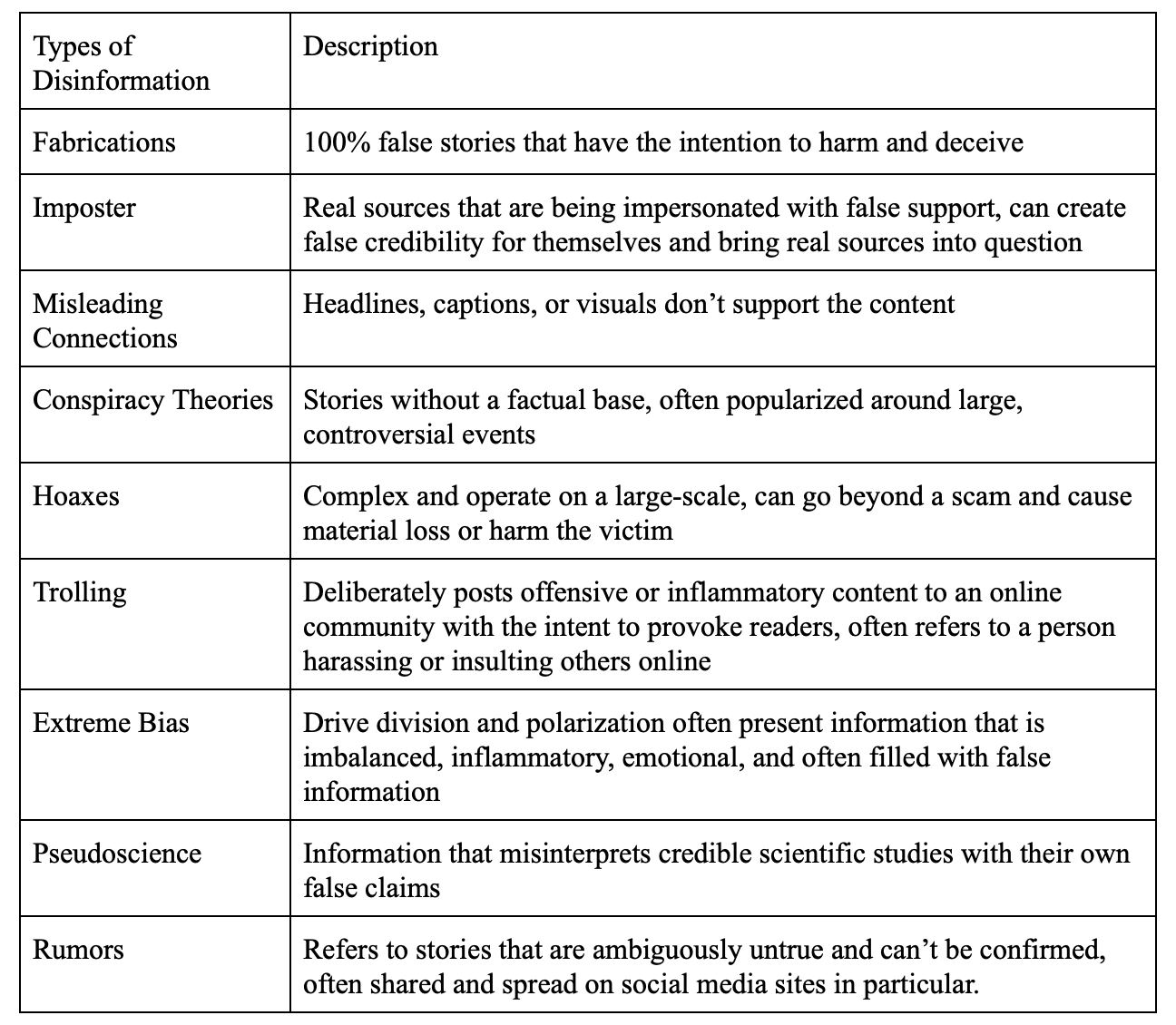

Disinformation is a broad topic, and there are many different types. A shared understanding of terms and definitions is essential, because different disinformation terms may rest on different theoretical analyses. Some scholars identify as many as 11 categories of disinformation. In this post we focus on nine, leaving out clickbait and fake reviews: these are often created and spread solely for profit and so are less useful for identifying trends. The remaining types are fabrications, imposters, misleading connections, conspiracy theories, hoaxes, trolling, biased content, pseudoscience, and rumors. The following table outlines each type and its description.

This categorization lets us begin to map the boundaries of disinformation as a whole. Drawing the line between disinformation, misinformation, and vaguer terms like fake news is essential for identifying the trends that are present only in disinformation. We can also look for similarities between the various types, grouping them into smaller subcategories to understand why, when, and how different types of disinformation are spread.

Three major motives are at play across all types of disinformation: profit, ideology, and psychology. Profit-based motives, however, rarely show any measurable trends. Ideological motives can lead individuals, and sometimes countries, to create a high volume of disinformation that aligns with their own political or social views, using a wide variety of mediums including social media, memes, and fake articles. Psychological motives, by contrast, often lead people to make quick, emotional decisions, resulting in smaller, disorganized bursts of amplification on platforms that emphasize speed, such as retweeting a false article’s headline or a compelling hoax or conspiracy theory. Whether it is being created or simply spread, this disinformation targets a specific audience to ensure that its message continues to be amplified.

The medium disinformation appears in is just as important as the creator’s motive; the most common venues are social media, fake articles, and forums. Many examples of disinformation are tailor-made for their environment, exploiting, for instance, the way a social media site cuts off everything but the headline, or the general chaos of an unmoderated anonymous forum. Beyond this, a tweet or fake article can have a very different impact than a faked picture, video, or sound bite. The medium a piece of disinformation is ultimately created in is invariably determined by its target demographic.

Another important trend is the timing of the creation and dissemination of disinformation. Timing is closely linked to motive, as disinformation is often spread most prominently around major events that call for individual decisions by the populace. The most prominent of these events are presidential elections, but events like COVID-19 vaccinations and even national tragedies can create their own waves of hoaxes, conspiracy theories, pseudoscience, and every other type of disinformation. These events are often divisive, inspiring intense emotional reactions that disinformation creators can capitalize on. The groups that form around these divisions can be wedged further apart through disinformation, or used to amplify and spread a twisted message or outright lies.

All of these trends culminate in the creator’s objective. By analyzing the motives, mediums, and timing of disinformation, one can identify the targeted demographic and form hypotheses about the creator’s intent. Conversely, understanding the creator’s intent and target demographic can help one anticipate their likely mediums, motives, and timing. Identifying these links in past disinformation campaigns can help scholars and practitioners understand the likely attack vectors of disinformation creators before their first post, image, or article appears.

Project funded by the Commonwealth Cyber Initiative - “Exploring the Impact of Human-AI Collaboration on Open Source Intelligence (OSINT) Investigations of Social Media Disinformation” project.